HAMPTON ROADS, Va. (WAVY) — Manipulation. Propaganda. Misleading political messaging. They’re all possibilities — make that probabilities — with the current state-of-the-art artificial intelligence and its ability to create deepfakes.

The technology that can clone faces and voices will keep getting more effective, and as a local researcher warns, more pervasive. It’s the evolution of photoshopping, which seems like a steam locomotive to the bullet train of deepfakes.

Daniel Shin is a research scientist at the Center for Legal and Court Technology at William & Mary Law School. He says we’re getting into previously uncharted territory.

“The biggest fear is this: we are entering in a scenario where not a lot of science fiction writers have even contemplated,” he said.

Days after Kamala Harris announced she’s running for president, a social media video on X made it look like she was telling the world she’s unfit for office. It’s fake, but millions of people have already seen it after it was superspread by Elon Musk.

Deepfake photos of Trump have him wearing a prison jumpsuit or fighting with police outside a courthouse.

Shin says with the right software, and a laptop that has an advanced graphics card similar to those used for gaming, someone with nefarious intentions can start duping the public.

“These are publicly available tools,” he said, and the Harris deepfake could have been created in a matter of hours once those tools are in place.

Shin demonstrated to WAVY how one person’s face can be substituted for another, as well as one person’s voice substituted for another.

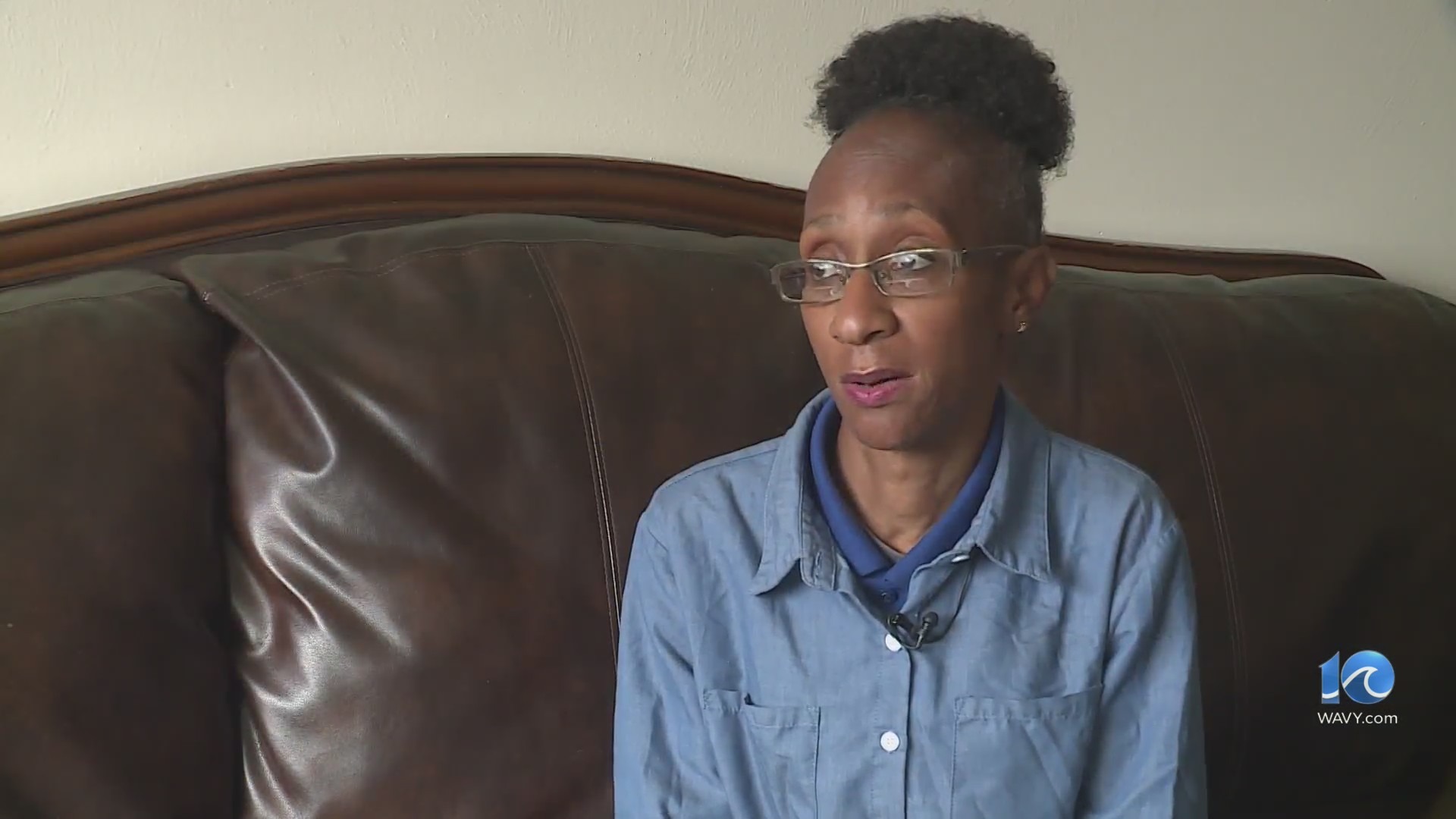

We gave him a sample of a report with Chris Horne on camera from June 2023. Then, he took a sample of the voice of WAVY anchor/reporter Regina Mobley, had his software learn and synthesize her voice, and then replaced Horne’s voice with Mobley’s. The pacing and inflection matched the mannerisms 100%.

Shin also demonstrated how to spot deepfakes. Often, the lighting on the replacement face doesn’t match the surroundings, and the eyeline doesn’t match so it looks as though the new face isn’t looking in the right direction.

Shin said the FBI has been warning about people using these types of AI technologies to scam people, so beware when you think Junior is calling Grandma claiming he’s in trouble.

“Somebody could take a voice sample of the grandson, for example, and say, ‘Grandma, I am in trouble, please send Walmart gift cards,’” Shin said.

“The fear is synthetic media, or deepfake media, may get the avalanche effect of popularity before it gets identified as synthetic media,” he warned. He said it will take collaboration among ethicists, religious leaders, legal scholars and law enforcement, to name just a few, to keep deepfakes in check.